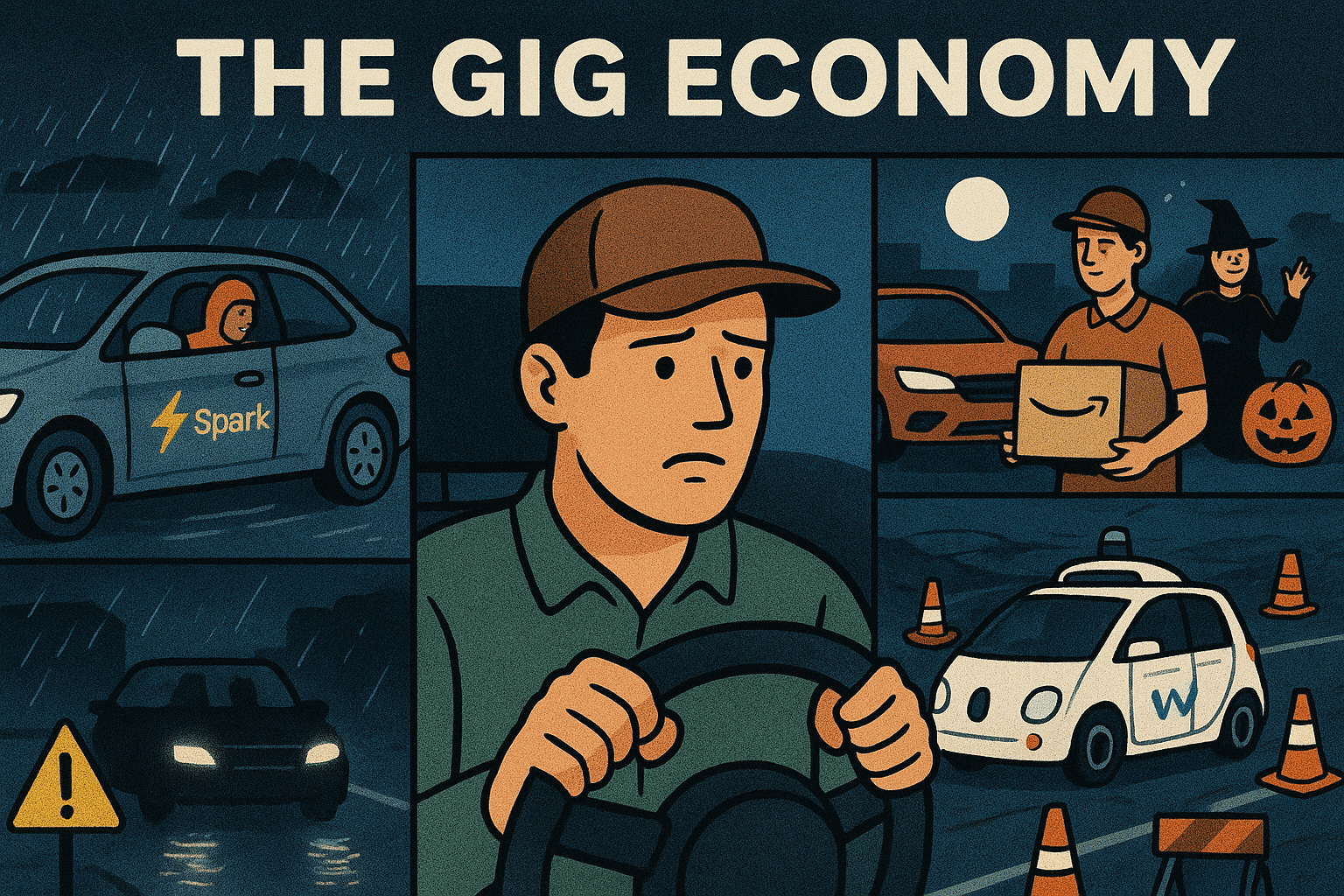

The week pulled us across the full map of the modern gig economy: slowdowns on Spark, wins on Amazon Flex, Halloween ride patterns, late-night safety, and the relentless march of autonomous vehicles. One tension ran through all of it. On one side, drivers weigh weather, fatigue, and shrinking incentives while hunting for profitable windows. On the other, platforms tout AI, automation, and growth. That clash shapes every decision: whether to accept a lowball route from the couch, risk rain glare at 1 a.m., or hedge by multi-apping. We unpack how drivers adapt while platforms optimize, and why these micro-choices add up to the future of local transport.

A standout thread is how events and seasons bend supply and demand. Halloween lifted ride volume with cheerful riders, predictable cues, and steady but unspectacular surge pricing, proving again that vibe matters as much as price. Yet rain and night driving cut visibility, raise stress, and shrink the margin for error. Those conditions hit school bus routes, rideshare, delivery, and Flex alike. The takeaway for drivers: plan around weather windows, set a minimum-pay floor that actually gets you off the couch, and use rest reminders to avoid lockouts. For cities, and the riders they serve, it is a nudge to improve lighting, temporary signage, and pickup zones that reduce chaos on busy nights.

Autonomy loomed large, with Waymo announcing new city launches and ambitious weekly trip targets. The promise is safer, cheaper, more available mobility, especially late at night and in underserved areas. But the messy reality surfaced in a clip where a Waymo tried to pass through a construction zone and drifted toward oncoming traffic. Cones, lane shifts, and ambiguous detours are where edge cases pile up. That's why transparency from companies matters: admit limits, restrict complex zones, and log interventions. Regulators need to move faster on clear rules for liability, data retention, and standardized incident reporting. Without those, every misstep becomes a referendum rather than a lesson.

Uber’s AI ambition adds another layer. Drivers can now earn small amounts by completing microtasks that train models. It’s incremental income, but it also casts the long shadow of training your own replacement. If platforms want trust, they should publish task pay ranges, explain how the models are used, and disclose how human contributors are credited. Meanwhile, Lyft piloted late-night campus discounts that doubled evening rides in Ann Arbor, a targeted nudge that serves both public safety and student wallets. Partnerships like this create immediate, local wins while the longer-term tech bets mature.

On the human side, we saw both heart and headaches. An 86-year-old rideshare driver in Fiji donates earnings to educate girls, proof that gig work can carry meaning beyond miles. A DoorDash PIN handoff showed why drivers must enforce verification even when customers get frustrated; a single false “not delivered” report can tank ratings and pay. And an Instacart swap that turned hoagie rolls into potatoes underlined a basic lesson: read the label, match the photo, and confirm substitutions. Small errors become big ones when trust is thin.

The throughline is control: over time, risk, and information. Drivers who thrive are deliberate. They set minimum pay floors, track weather and events, pause when visibility drops, and screenshot every key screen. Riders benefit from being precise with PINs, instructions, and pickup locations. Platforms earn loyalty when they design for edge cases, share model limits, and keep incentives honest. Autonomy will arrive, and on balance it will save lives, but it must be rolled out with humility, data, and clear accountability. Until then, it’s still a human craft: navigating nights, cones, and choices, one trip at a time.