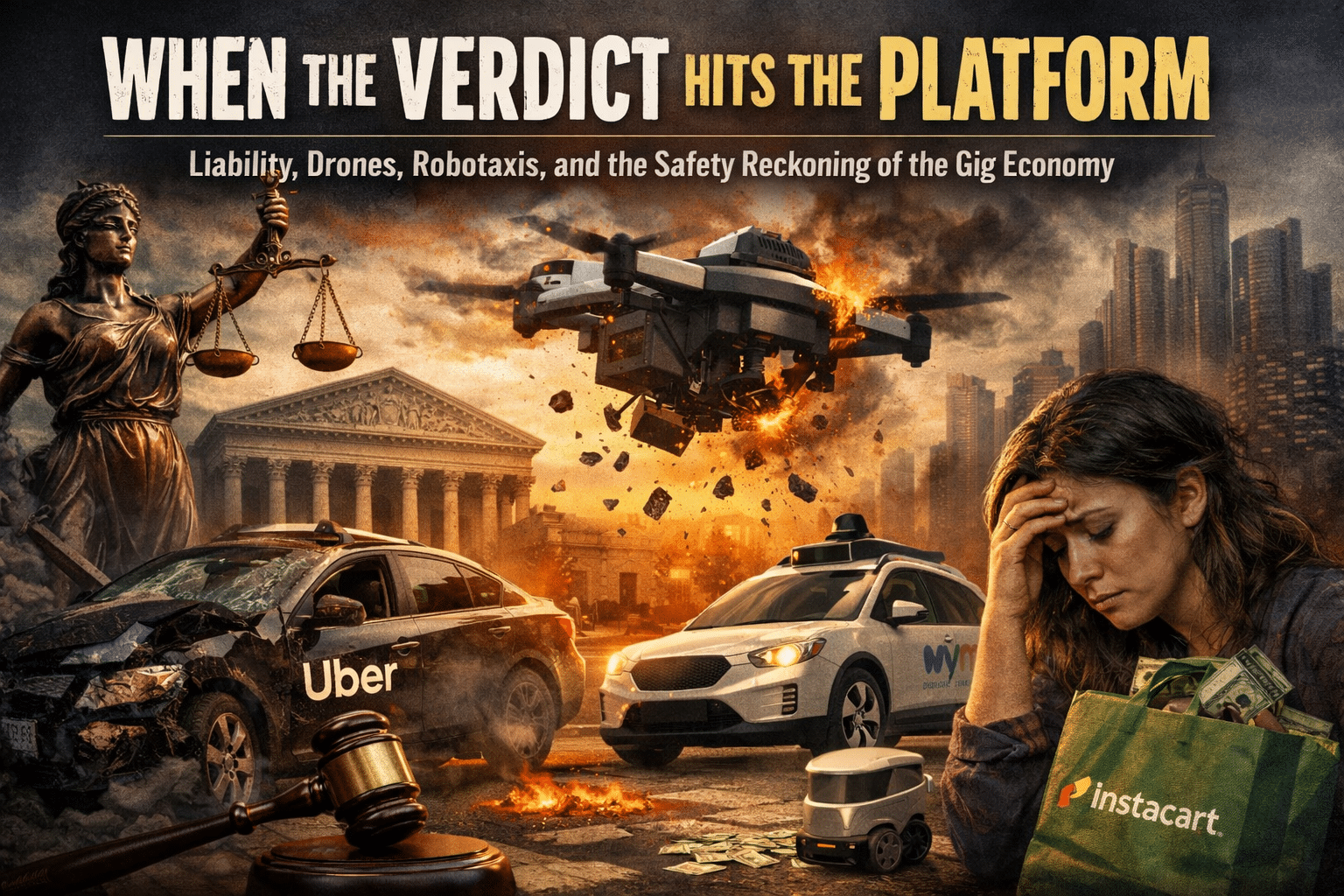

The gig economy sits at an awkward crossroads where legal theory, human harm, and fast-moving tech collide. Two court rulings anchor the moment: a jury ordering Uber to pay $858.5 million in a sexual assault case under “apparent authority,” and another verdict awarding nearly $16 million against Instacart after an inattentive driver fatally struck a man. These aren’t just headlines; they signal a shift in who pays when things go wrong. If platforms present contractors as their face to the customer, juries may decide the platforms share the risk. For victims, that matters: individual drivers rarely have the assets or insurance limits to match the scale of harm. For platforms, it could force overdue investment in prevention rather than PR.

Safety isn’t one story; it’s a stack. Sexual assaults, assaults on drivers, and carjackings have become so common that many creators no longer cover every case, to avoid numbing their listeners. Yet ignoring them helps no one. The lesson points to clearer protocols and better tech: always-on, dual-facing dash cams subsidized by platforms, faster background-check cycles with real-time flags, survivor-centered reporting that doesn’t retraumatize, and transparent safety metrics. If liability rises, safety will follow the money. But we should separate preventable misconduct from accidents. Distracted driving is a choice; delivery deadlines and app nudges don’t force anyone to tap a screen at 40 mph. Design should reduce the temptation, but accountability must still rest with the human behind the wheel.

Tech adds its own chaos. A viral clip shows a large delivery drone failing midair near an apartment window, debris flying and smoke billowing. At that size and speed, it’s a lethal object, not a gadget. Meanwhile, Waymo acknowledges that “fleet response” agents, some of them abroad, provide guidance to stuck robotaxis while the automated system retains control. The nuance matters, but public trust hinges on who is actually making the call when edge cases arise. If remote advisors nudge a vehicle into a risky maneuver, regulators will want logs, not assurances. And on sidewalks, Tennessee may double the speed limit for personal delivery devices to 20 mph. That’s dangerous territory when four-foot-tall robots meet strollers, seniors, and dogs in tight spaces.

The strangest subplot is how platforms stretch into unintended uses. A driver on a courier run to a motel finds pills hidden in a hollowed-out hamburger bun. That’s not convenience; that’s risk. The right move is to contact police immediately and screenshot the whole chain: sender, route, and chat. Support scripts that offer $15 to drop contraband off at a precinct don’t fix a system that can turn any courier into a mule. Policy needs to block suspicious package handoffs at the door, require verifiable pickup sources, and freeze accounts flagged by multiple drivers. If algorithms optimize for completed tasks over verified safety, they’ll invite the worst behavior to scale.

Even lighter moments raise serious questions. A rider places a parakeet on a Waymo’s steering wheel and gets a stern terms-of-service warning. It’s funny and harmless, but it shows how thin the line is between novelty and hazard when autonomy meets humans. Elsewhere, claims of 100,000 trips spark a different debate: sustainability. If you truly live on the road, EVs make economic sense, with less routine maintenance, no oil changes, regenerative braking that reduces brake wear, and reliably lower energy costs. The downside is higher repair bills for the rare major failure, but for high-mileage gig workers, total cost of ownership often favors electric. Policy nudges and platform incentives could accelerate that shift and cut operating risk.

Across these threads, three priorities emerge. First, tighten platform responsibility where branding, onboarding, and customer promises clearly imply agency. Second, invest in preventive safety tech and processes that respect both riders and drivers, with clear evidence trails. Third, slow down sidewalk autonomy and air delivery until failure modes are rare and survivable. The gig economy thrives on speed, but trust is built on restraint, transparency, and care for the people doing the work. If verdicts force that recalibration, we may finally see safety treated as a feature, not a footnote.